I thought it’d be worth summarising the whole blog series in one easy Google-able post, so here is a summary of the 11-article rant I went on.

There is no particular order to this. It was a brain dump of all the obstacles I could see if an organisation decided to aggressively pursue an AWS first strategy. Many of them apply across the whole cloud spectrum (Is that a mixed metaphor?). I will get around to developing a road-map document to address these issues. If you’re interested in a free draft of it send me a message through your preferred social network in the right side bar.

[table th=”1″]Blog title,Issue,Description

11 problems with AWS – part 1,Data Sovereignty,Data in the cloud crosses many legal boundaries. Whose laws must you follow?

11 problems with AWS – part 2,Latency,You cannot speed up those little electrons. Those inter- and trans-continental traversals add up!

11 problems with AWS – part 3,Vendor Lock-in,All those value-adds hook you in like a heroine.

11 problems with AWS – part 4,Cost Management,Keep throwing resources at it man! The monthly bill wont look that bad!

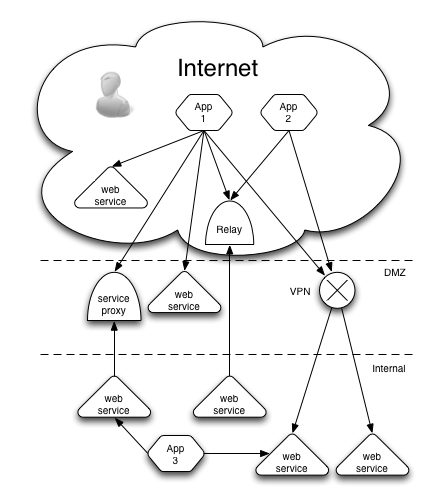

11 problems with AWS – part 5,Web Services,Distributed? This app is all over the place like a mad woman’s custard!

11 problems with AWS – part 6,Developer Skill,How good are our developers? Do they get Auto-scaling? Caching? Load balancing?

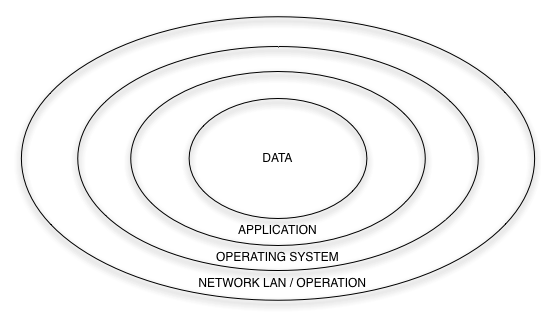

11 problems with AWS – part 7,Security,Where did you put my deepest secrets?

11 problems with AWS – part 8,Software Licensing,Am I allowed to run this in AWS? OR Why IBM is tanking.

11 problems with AWS – part 9,SLAs,99.95% is not 99.999%

11 problems with AWS – part 10,Cloud Skills,Pfft! Cloud skills! We did this 30 years ago on the mainframe!

11 problems with AWS – part 11,Other Financial things,Talk to your accountant about tax and insurance… urgh!

[/table]Enjoy and share and let me know what I’ve missed.